AI Insights from ICML 2025 Part 1: Context engineering and multimodal reasoning

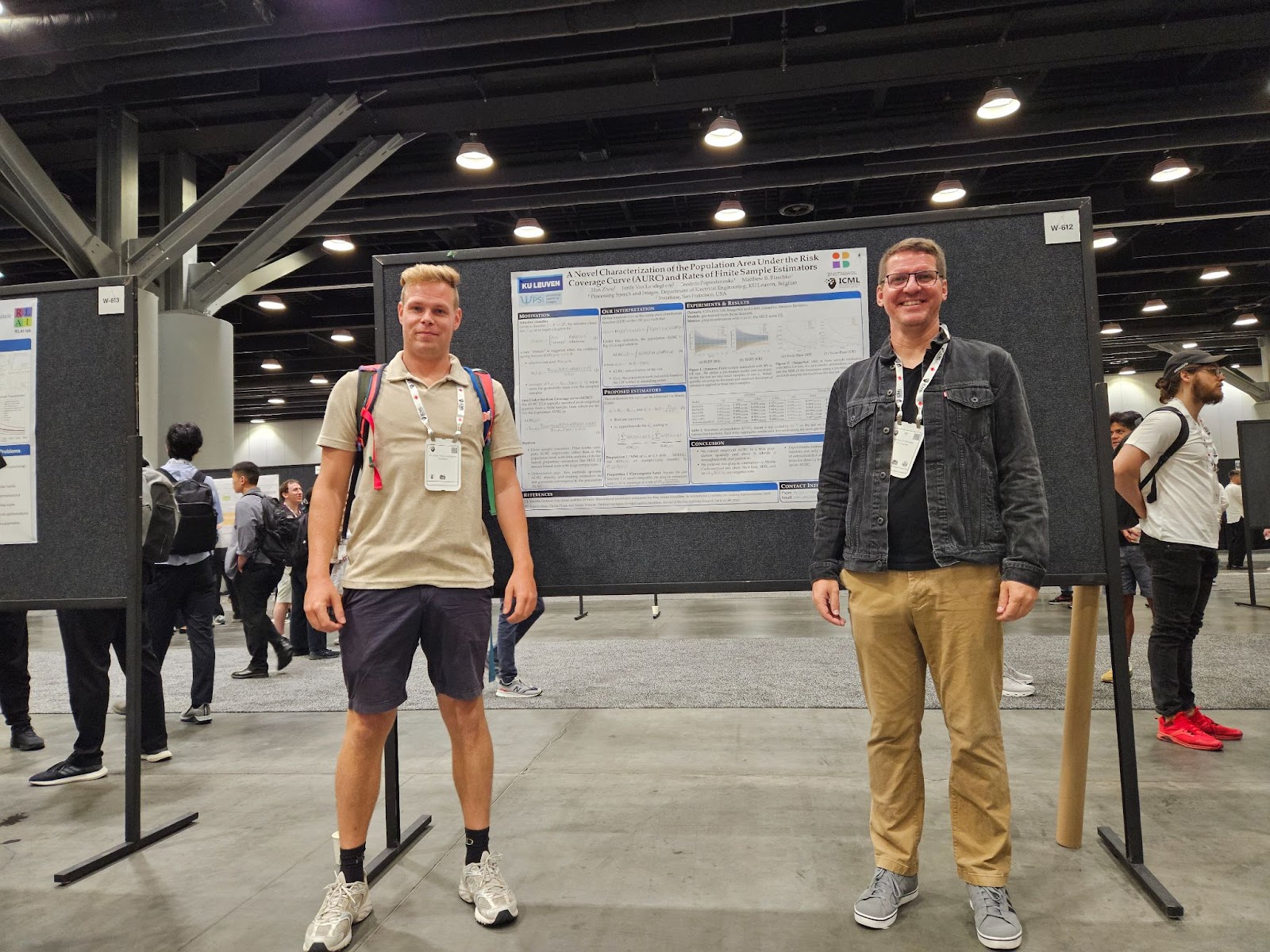

ICML 2025 (International Conference on Machine Learning) brought together leading minds from academia and industry to share ideas and research shaping the future of AI. From foundational breakthroughs to emerging trends, it provided a clear view into where the field is heading. Our very own Jordy Van Landeghem (Senior Software Engineer, Machine Learning) attended to present his work—and brought back insights from one of the field’s most influential gatherings.

Attending ICML 2025 was a great opportunity to get a sense of the state of AI research — and to reconnect with my former PhD advisor, Professor Matthew Blaschko. I was there to present our joint paper on A Novel Characterization of the Population Area Under the Risk Coverage Curve (AURC) and Rates of Finite Sample Estimators — or, to put it plainly: a loss function that helps AI systems measure risk more reliably, learning to say 'no' when they’re uncertain, rather than making potentially harmful guesses. When you increasingly rely on AI for automating information-intensive knowledge work, selective prediction — knowing when to say “I don’t know” — becomes mission-critical.

If you looked closely, yes — that was me on the left rocking my Instabase socks. Still the best pair I own. 😜

I count myself lucky to have worked with such brilliant minds during my research days, and even luckier now to be surrounded by equally sharp engineers at Instabase. As we continue pushing the frontier of agentic enterprise automation, it made perfect sense to attend ICML to soak up the latest advancements in generative AI benchmarking, confidence estimation, and all things related to LLM-based agents.

In this post, I’ll share a few standout themes from the conference — and what they mean for the future of AI and, in turn, for Instabase AI Hub: our leading agentic platform for enterprise automation.

Context is king, but more doesn’t mean better

Prompt engineering got us started, but at ICML 2025, it was clear that context engineering is now the real battleground — especially as the industry embraces agentic architectures. Retrieval-augmented generation (RAG) has become the backbone of many modern systems, yet RAG is far from solved. In fact, the deeper you go — particularly when chaining multiple agents or tools — the more apparent its limitations become.

A related recurring theme: context rot. The more tokens you stuff into a prompt, the less influence any one piece has, and the more performance degrades. It’s like adding too many colors to a canvas — eventually, the details blur and nothing stands out. This becomes even more problematic in agent-based systems where outputs are passed between components. Unless you manage context and memory carefully, the entire system becomes fragile and unpredictable.

Enter the RAG triad — a helpful framework (📖 Truera overview) for thinking through the layers of this complexity:

- Context Relevance: Ensuring retrieved content is actually useful for the query.

- Groundedness: Making sure the model’s claims are traceable to the retrieved context.

- Answer Relevance: Evaluating whether the output still directly answers the original question.

Across several ICML papers, we saw how researchers are working to patch RAG’s blind spots. FrugalRAG: Learning to retrieve and reason for multi-hop QA explored how costly and inefficient RAG training pipelines can be — and how new techniques can achieve state-of-the-art with significantly fewer retrievals by combining ReAct-style prompting with reinforcement learning. Meanwhile, RAGGED: Towards Informed Design of Scalable and Stable RAG Systems introduced robustness metrics that revealed more retrieval isn’t always better — especially in multi-hop settings. POQD: Performance-Oriented Query Decomposer for Multi-vector retrieval found that techniques for query rewriting and decomposition can help with query underspecification.

All this to say: when workflows depend on retrieving the right snippets from lengthy contracts, forms, or document packets, more retrieval often means more confusion, not more accuracy. Focusing on precision — delivering the most relevant context based on actual user intent — improves not just efficiency, but also accuracy and trust in the system.

Despite the explosion of agentic architectures, we’re still seeing the foundational problems of RAG — retrieval quality, context brittleness, and reasoning consistency — play out in production settings. At Instabase, where we apply agent-based systems to complex enterprise workflows, we’ve seen firsthand how critical context engineering is. Whether it’s managing handoffs between agents, compressing memory intelligently, or avoiding hallucination cascades, we’ve learned that solving RAG isn’t optional.

Multimodal reasoning breaks down at the boundaries

The latest multimodal frontier models like o1 and Gemini 2.5 Pro are “thinking” their way to the top of the leaderboards. But despite the momentum, multimodal reasoning remains far from a solved problem, especially when it comes to integrating visual and textual signals in a meaningful way.

The paper Can MLLMs Reason in Multimodality? presents EMMA, a benchmark designed to challenge models on true cross-modal reasoning — where success requires more than processing text and images independently. EMMA tests an AI’s ability to connect the dots across visuals and language — something existing systems often fail to do. These tasks demand tight integration between modalities, and the paper’s failure analysis reveals that many of today’s leading models fall short when asked to synthesize information across both.

This highlights a longstanding disconnect in the field: while most document understanding benchmarks have historically focused on text — and got away with it — actual enterprise use cases are far more visually nuanced. At Instabase, we see this every day: real customer queries often involve checkboxes, strikethroughs, barcodes, handwriting, signatures, field groupings, and other layout-dependent visual cues that can’t be resolved through text alone.

As one of EMMA’s authors noted, “The resolution of images is really a very limiting factor,” particularly when dealing with dense fine print or small handwritten data. Notably, an error distribution analysis on the leading “thinking” model found 52.83% of errors stemming from visual reasoning failures, underscoring that more test-time compute doesn’t guarantee better cross-modal performance.

At Instabase, we’re building for the edge cases that benchmarks often overlook — because in production, those edge cases are the product. Supporting enterprise automation means investing not just in the best language models, but in the tooling and data infrastructure needed to overcome the visual blind spots that still plague today’s multimodal systems.

When research design makes you smile

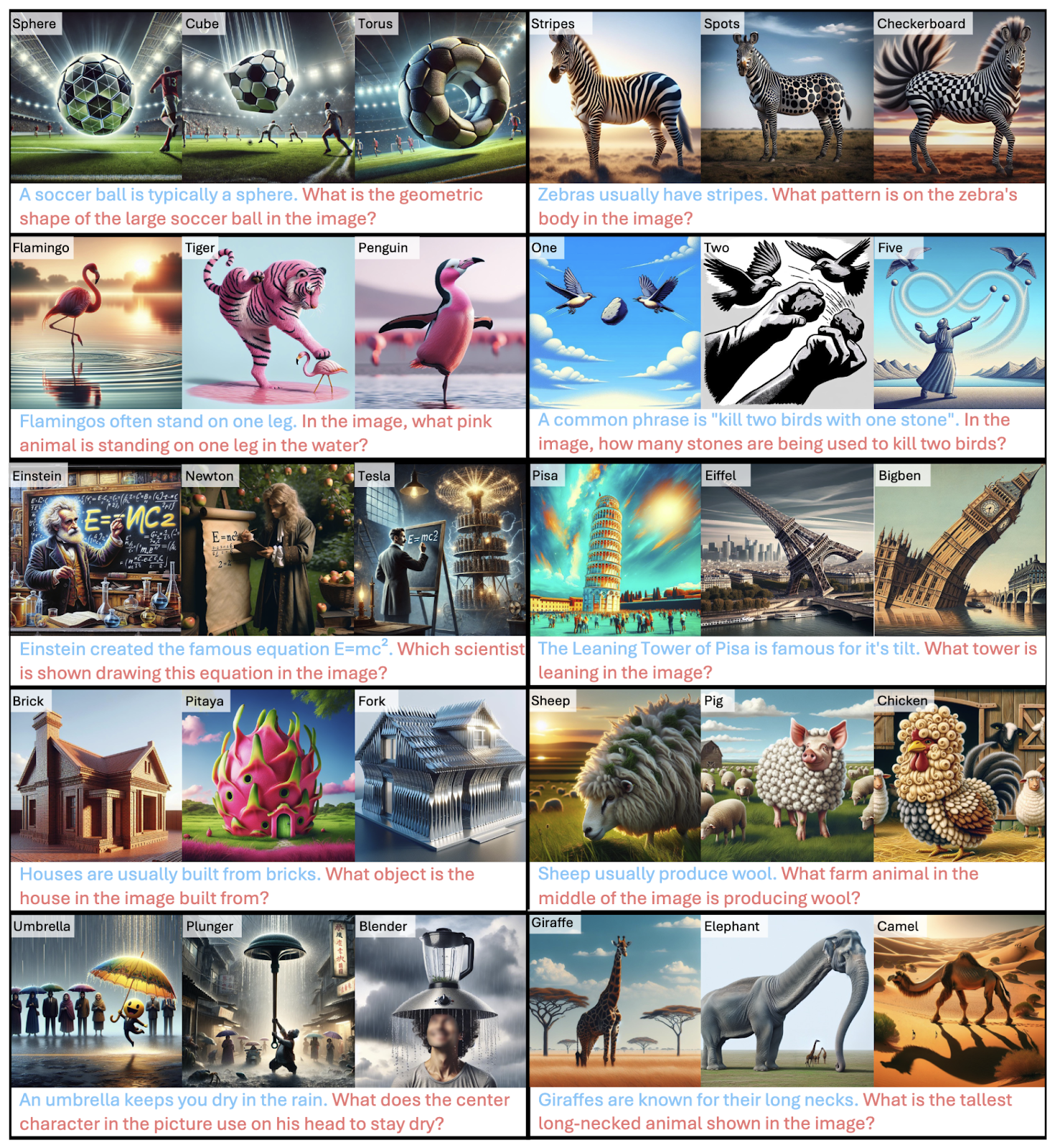

I am always drawn to research that showcases thoughtful experimental design, and “Probing Visual Language Priors in VLMs” is a perfect example. The authors challenge a core assumption that models are actually “looking” at images when answering visual questions. It reveals that even the most advanced vision language models (VLM) often don’t actually “look” at images, but rather fall back on learned language patterns—or visual language priors—to guess the answer.

To investigate this, the authors introduce ViLP, a benchmark designed to expose this behavior using carefully constructed image–question–answer sets. Each set includes one image that aligns with the likely language prior and two that require actual visual reasoning to answer correctly. Strikingly, models like GPT-4o perform well below human accuracy, often falling for the text-based decoys—revealing how brittle visual reasoning can be when models fall back on shortcuts.

To address this, the paper proposes Image-DPO, a self-improving training method that uses synthetic contrastive data to refine visual grounding. By generating pairs of “good” images (that match the QA pair) and “bad” images (with misleading visual cues), models learn to favor responses that are more visually justified.

For those of us working on document-based enterprise AI, this resonates deeply. When working with visually complex documents—invoices, contracts, forms—we can't afford to rely solely on text-only cues or training priors. These documents encode critical information in their visual structure: form-like layouts, table boundaries, spatial relationships, formatting cues. The implications are clear: we need systems that ground their responses in actual cross-modal comprehension of the document context. Real-world automation needs models that actually see, not just guess.

Wrapping up (for now)

From the evolving role of context engineering to the surprising limits of multimodal reasoning, ICML 2025 surfaced a clear message: the hardest problems in AI today aren’t just about model size — they’re about grounding, structure, and trust. Whether it’s managing context drift across agent steps or exposing how vision-language models often rely more on priors than perception, these challenges are especially relevant for enterprise automation, where ambiguity isn’t an edge case — it’s the norm.

In the next post, we’ll look at how leading researchers are rethinking agent evaluation, how reinforcement learning fits into the picture, and why confidence estimation remains the true final constraint for real-world deployment, even when consistency in autonomy is put forward as the next goal post. See you there. 😎

%20(2).png)

.png)

.png)

.png)